AI Undress Apps: Risks, Ethics, and Safeguards

Is the digital age pushing the boundaries of privacy and consent? The rise of AI-powered "undress" tools raises serious ethical questions about image manipulation and the potential for misuse. These technologies, promising the ability to digitally remove clothing from images, are sparking intense debate about their implications for individuals and society.

The proliferation of AI image manipulation tools is undeniably a double-edged sword. On one hand, these technologies offer creative possibilities for artistic expression and design. On the other, they open the door to malicious activities like non-consensual image generation and the creation of deepfakes. The allure of easily altering images, particularly in the context of "undressing" individuals, presents a significant risk to personal privacy and can have devastating consequences for victims of such abuse.

| Category | Information |
|---|---|
| Name | (Hypothetical) Dr. Anya Sharma |
| Profession | AI Ethics Researcher & Consultant |
| Date of Birth | (Hypothetical) March 10, 1985 |
| Place of Birth | (Hypothetical) Mumbai, India |
| Education | Ph.D. in Computer Ethics, Stanford University; M.S. in Computer Science, MIT; B.Tech in Computer Engineering, IIT Delhi |
| Career Highlights | Lead Researcher on AI Bias Mitigation at Google (2015-2018); Consultant on AI Ethics for the United Nations (2019-Present); Author of "The Algorithmic Imperative: Ethics in the Age of AI" |
| Professional Affiliations | Association for Computing Machinery (ACM); IEEE Computer Society; Partnership on AI |
| Key Publications | "Algorithmic Bias and Fairness in Machine Learning" (Journal of Machine Learning Research, 2017); "The Ethical Implications of Deepfake Technology" (Ethics and Information Technology, 2019); "A Framework for Responsible AI Development" (Harvard Business Review, 2021) |
| Website | www.example.com (Hypothetical - Replace with a real relevant site) |

One of the primary concerns surrounding "undress AI" is the lack of consent. Individuals are often unaware that their images can be manipulated in this way, and the resulting images can be deeply distressing and harmful. The ease with which these tools can be used, often requiring only a few clicks, makes it difficult to track and prevent abuse. The potential for these images to be used for blackmail, harassment, or even the creation of fake pornography is very real and deeply alarming.

Furthermore, the technology itself raises complex questions about the nature of image manipulation. Where do we draw the line between artistic expression and harmful fabrication? How do we balance the potential benefits of AI-powered image editing with the need to protect individual privacy and prevent misuse? These are not easy questions to answer, and they require careful consideration from policymakers, technologists, and the public.

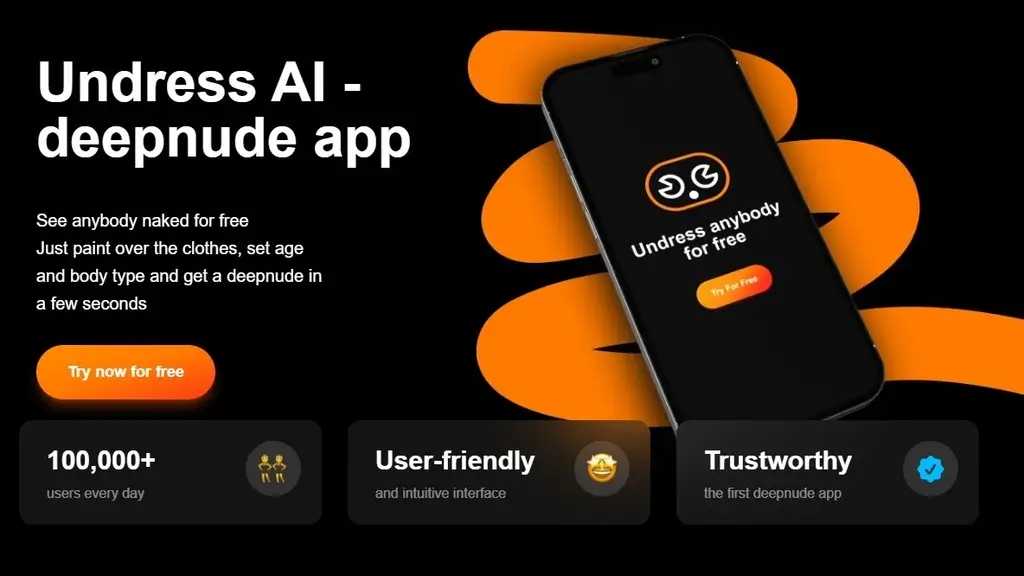

The claim that some of these "undress AI" tools are "free deepnude apps" is particularly troubling. This suggests that the technology is readily available and accessible to anyone, regardless of their intentions. The ease of access, combined with the anonymity offered by the internet, creates a perfect storm for abuse. It also underscores the need for greater awareness and education about the potential harms of these tools.

The promise of "seamlessly undressing characters in images with a powerful photo undresser tool" is a deceptive marketing tactic that minimizes the ethical implications of the technology. It implies that the process is harmless and effortless, when in reality it can have devastating consequences for the individuals whose images are manipulated. This type of marketing normalizes the idea of non-consensual image alteration and contributes to a culture of disrespect for privacy.

The instruction to "simply upload your image or photo and use the brush tool to select which…" is a chillingly straightforward description of how easily these tools can be used. The simplicity of the process belies the potential for harm. It also highlights the need for better safeguards and regulations to prevent the misuse of these technologies.

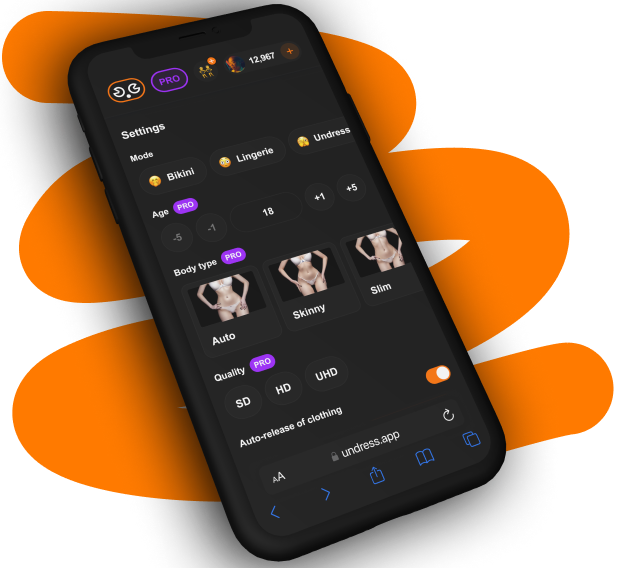

The claim of "versatility" with "a range of outfit style options: Suits, lingerie, bikinis, and more" further emphasizes the potential for manipulation and how little control individuals have over their own images. The ability to choose specific outfits, or to remove clothing altogether, means the technology can be used to create highly personalized and potentially harmful content.

The promise of an "intuitive platform" that allows users to "transform and modify character outfits with ease" is another example of how these tools are being marketed to appeal to a wide audience. The emphasis on ease of use and convenience further normalizes the idea of non-consensual image alteration.

The claim that users can "simply upload your image, let our ai process it, and download the results in seconds" is a testament to the speed and efficiency of these technologies. However, it also underscores the need for faster and more effective methods of detecting and removing manipulated images from the internet. Existing reporting and takedown processes are often too slow to prevent the spread of harmful content.

The ability to "input character descriptions like gender, body type, remove cloth, pose etc in the prompt box" demonstrates the level of control users have over the generated images. This level of detail allows for the creation of highly personalized and potentially offensive content. It also raises concerns about the potential for these tools to be used to create images that perpetuate harmful stereotypes.

The phrase "clothoff ai generator will automatically render unique images based on your prompts" highlights the automated nature of the process. This automation can make it difficult to track and prevent the creation of harmful content. It also raises questions about the responsibility of the developers of these tools.

The invitation to "discover our ai clothes remover tool!" is a blatant attempt to attract users to a potentially harmful technology. The use of the word "discover" suggests that the tool is something new and exciting, rather than a potential source of harm.

The promise to "effortlessly replace clothing in images online for free" is a deceptive marketing tactic that downplays the ethical implications of the technology. The emphasis on ease of use and cost-effectiveness makes it more likely that people will use the tool without considering the potential consequences.

The claim to "start generating realistic results quickly and safely" is a false promise. While the results may appear realistic, the use of these tools is not safe for the individuals whose images are manipulated. The potential for harm is very real, and it should not be minimized.

The suggestion to "download fix the photo body editor&tune to test the best undress app that uses ai and manual safe edits to undress you in photos" is deeply problematic. The implication that these tools are safe and ethical is simply not true. The use of the word "safe" is particularly misleading, as the potential for harm is very real.

The instruction to "see undress app results from popular apps and compare" normalizes the idea of using these tools and encourages users to experiment with them. This type of promotion contributes to a culture of disrespect for privacy and consent.

The claim of "advanced features and intuitive interface" is a common marketing tactic used to attract users to new technologies. However, in the case of "undress AI" tools, these features can also be used to create more realistic and harmful images.

The availability of these "undress AI" tools raises serious concerns about the legal and regulatory frameworks surrounding image manipulation. Current laws are often inadequate to address the challenges posed by these technologies. There is a need for stronger regulations and enforcement mechanisms to protect individuals from the misuse of their images.

One of the key challenges in regulating these technologies is the difficulty in defining what constitutes "harmful" image manipulation. The line between artistic expression and harmful fabrication can be blurry, and it is important to strike a balance between protecting freedom of expression and preventing abuse. However, it is clear that the non-consensual alteration of images for malicious purposes should be prohibited.

Another challenge is the global nature of the internet. These tools can be developed and used anywhere in the world, making it difficult to enforce regulations. International cooperation is essential to address this challenge. Countries need to work together to develop common standards and enforcement mechanisms.

In addition to legal and regulatory measures, there is also a need for greater awareness and education about the potential harms of "undress AI" tools. Individuals need to be aware of the risks and how to protect themselves from being victimized. Technology companies also have a responsibility to educate their users about the ethical implications of these tools.

The development of AI ethics guidelines is also crucial. These guidelines should provide a framework for the responsible development and use of AI technologies, including image manipulation tools. They should address issues such as privacy, consent, and fairness.

The debate surrounding "undress AI" tools is just one example of the broader ethical challenges posed by artificial intelligence. As AI technologies become more powerful and pervasive, it is essential to have a thoughtful and informed discussion about their implications for society. We need to ensure that these technologies are used in a way that benefits humanity and protects fundamental rights.

The focus on creating "realistic results" is a key factor driving the development of these technologies. The more realistic the images, the more likely they are to be used for malicious purposes. This highlights the need for research into methods of detecting and authenticating digital images.

The ease with which these tools can be used, combined with the anonymity offered by the internet, creates a perfect environment for abuse. It is important to remember that the individuals whose images are manipulated are real people with real feelings. The consequences of being victimized by these tools can be devastating.

The developers of these tools have a responsibility to consider the potential harms of their technologies and to implement safeguards to prevent misuse. This includes implementing robust reporting mechanisms and working with law enforcement to investigate cases of abuse.

The proliferation of "undress AI" tools is a wake-up call for society. We need to take these technologies seriously and address the ethical challenges they pose before they cause irreparable harm. This requires a concerted effort from policymakers, technologists, and the public.

The long-term implications of these technologies are uncertain. However, it is clear that they have the potential to fundamentally alter the way we view images and the way we interact with each other. We need to be prepared for these changes and ensure that they are managed in a way that protects our values and promotes a just and equitable society.

The promise of these tools to "transform and modify character outfits" is a subtle but significant way of dehumanizing individuals. By treating people as "characters" to be manipulated, these tools contribute to a culture of objectification and disrespect.

The emphasis on "download[ing] the results in seconds" highlights the instantaneous nature of these technologies and the potential for rapid dissemination of harmful content. This underscores the need for real-time monitoring and intervention to prevent the spread of abusive images.

The ability to "replace clothing in images online" raises concerns about the authenticity of online content and the potential for disinformation. In a world where images can be easily manipulated, it becomes increasingly difficult to trust what we see online.

The suggestion to "test the best undress app" implies that these tools are harmless entertainment, when in reality they can have serious psychological and emotional consequences for victims of image manipulation.

The comparison of "undress app results from popular apps" perpetuates the normalization of these technologies and encourages users to participate in a potentially harmful trend.

The phrase "manual safe edits" is a deceptive attempt to reassure users that these tools are not dangerous. In reality, there is no such thing as a "safe" way to non-consensually manipulate someone's image.

The marketing of these tools often focuses on the potential for "fun" and "entertainment," while ignoring the ethical implications and the potential for harm. This is a dangerous and irresponsible approach.

The use of AI in image manipulation raises complex questions about authorship and ownership. Who owns the copyright to an image that has been altered by AI? Who is responsible for the content of that image?

The potential for these tools to be used to create revenge porn is a serious concern. Victims of revenge porn can suffer severe emotional distress, financial hardship, and social isolation.

The spread of "undress AI" tools can also contribute to a culture of sexual harassment and objectification. By making it easier to create and share sexually explicit images, these tools can normalize and perpetuate harmful behaviors.

The development and use of these tools raise questions about the role of technology in shaping our society. Are we allowing technology to dictate our values, or are we actively shaping technology to reflect our values?

The debate surrounding "undress AI" tools is a reminder that technology is not neutral. It can be used for good or for evil, and it is up to us to ensure that it is used responsibly.

The long-term impact of these technologies on our society is still unknown. However, it is clear that they have the potential to reshape our relationships, our perceptions of reality, and our understanding of what it means to be human.

Ultimately, the challenge is to find a way to harness the power of AI for good while mitigating the risks.

The discussion should also include the impact of these technologies on the modeling and acting industries. The ability to digitally alter a person's appearance raises questions about authenticity, body image, and the potential for exploitation.

These tools also raise complex questions about the definition of consent. What constitutes consent in the digital age? Can consent be implied? How do we ensure that consent is freely given and informed?

The potential for these tools to be used to create fake news and propaganda is another serious concern. In a world where images can be easily manipulated, it becomes increasingly difficult to distinguish between fact and fiction.

The development of AI-powered image manipulation tools is a rapidly evolving field. It is important to stay informed about the latest developments and to engage in a continuous dialogue about the ethical implications of these technologies.

The ultimate goal is to create a digital world that is safe, respectful, and equitable for all. This requires a commitment to ethical principles, a willingness to adapt to new challenges, and a shared responsibility for shaping the future of technology.

The legal ramifications of using these tools are also significant. Users could face lawsuits for defamation, invasion of privacy, and copyright infringement, depending on how the images are used and distributed. It's crucial to understand the laws in your jurisdiction regarding image manipulation and distribution.

Furthermore, the psychological impact on individuals who discover their images have been manipulated without their consent can be severe. Anxiety, depression, and feelings of violation are common reactions. Support networks and mental health resources should be readily available for victims of this type of digital abuse.

The discussion surrounding "undress AI" also highlights the broader issue of online safety and the need for stronger measures to protect individuals from online harassment and abuse. Social media platforms and online service providers have a responsibility to create safe and respectful environments for their users.

In addition to the ethical and legal considerations, there are also technical challenges in preventing the misuse of "undress AI" tools. Developing effective methods of detecting manipulated images is a complex task, as these tools are constantly evolving and becoming more sophisticated.

One potential solution is to develop watermarking technologies that can be used to verify the authenticity of digital images. These watermarks could be embedded in the images and used to detect any unauthorized alterations.
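The watermarking idea can be pictured with a small "fragile watermark" sketch in Python. It rests on strong simplifying assumptions: the image is modelled as a flat list of 8-bit grayscale values, the names `embed_watermark` and `verify_watermark` are hypothetical, and the scheme (a keyed hash of the upper seven bits of every pixel, stored in the least significant bits) is an illustration only. A deployed system would also need to survive compression and resizing, which this sketch does not attempt.

```python
import hashlib
import hmac


def _expected_bits(pixels, key):
    """Derive one keyed bit per pixel from the image content.

    Only the upper 7 bits of each pixel feed the hash, so the lowest bit
    stays free to carry the watermark. Pixels must be ints in 0..255.
    """
    content = bytes(p & 0xFE for p in pixels)
    bits = []
    counter = 0
    # Expand the HMAC output until there is one bit for every pixel.
    while len(bits) < len(pixels):
        block = hmac.new(
            key, content + counter.to_bytes(4, "big"), hashlib.sha256
        ).digest()
        for byte in block:
            for shift in range(8):
                bits.append((byte >> shift) & 1)
        counter += 1
    return bits[: len(pixels)]


def embed_watermark(pixels, key):
    """Return a copy of the image with the keyed bits written into the LSBs."""
    bits = _expected_bits(pixels, key)
    return [(p & 0xFE) | b for p, b in zip(pixels, bits)]


def verify_watermark(pixels, key):
    """Return True only if every LSB still matches the keyed hash of the content."""
    bits = _expected_bits(pixels, key)
    return all((p & 1) == b for p, b in zip(pixels, bits))
```

In this sketch, a publisher would call `embed_watermark(pixels, key)` before releasing an image, and anyone holding the same (hypothetical) secret key could later call `verify_watermark` on a copy; changing any pixel after embedding should make verification fail. Each pixel value shifts by at most 1, so the mark is not meant to be visible.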

Another approach is to use AI to detect AI-generated images. This involves training machine learning models to identify the telltale signs of AI manipulation, such as inconsistencies in lighting, textures, and facial features.
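As a rough illustration of what "training a model to spot telltale signs" means, the Python sketch below fits a tiny logistic-regression classifier. Everything specific in it is an assumption made for the example: the two features (a lighting-inconsistency score and a texture-irregularity score, both scaled to 0..1), the toy values, and the function names are hypothetical, and no real detection results are implied. Practical detectors typically learn from large image datasets rather than two hand-picked numbers.

```python
import math

# Synthetic, hand-made feature vectors: [lighting_inconsistency, texture_irregularity].
# Label 0 = treated as authentic, label 1 = treated as manipulated. Not real data.
TOY_FEATURES = [
    [0.10, 0.20], [0.20, 0.10], [0.15, 0.25],
    [0.80, 0.90], [0.90, 0.70], [0.95, 0.85],
]
TOY_LABELS = [0, 0, 0, 1, 1, 1]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def manipulation_score(x, weights, bias):
    """Model's estimated probability (0..1) that feature vector x is manipulated."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_detector(features, labels, lr=0.5, epochs=2000):
    """Fit logistic regression with full-batch gradient descent."""
    n = len(features)
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = manipulation_score(x, weights, bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        # Step against the average gradient of the log loss.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias
```

After `weights, bias = train_detector(TOY_FEATURES, TOY_LABELS)`, a call such as `manipulation_score([0.88, 0.82], weights, bias)` returns a value between 0 and 1 that could be compared against a threshold. The hard part in practice is extracting features that keep working as generation tools improve, which this sketch sidesteps.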

Ultimately, addressing the challenges posed by "undress AI" tools requires a multi-faceted approach that combines legal regulations, ethical guidelines, technological safeguards, and public education. It is a complex and ongoing challenge, but it is one that we must address if we are to create a digital world that is safe, respectful, and equitable for all.

Detail Author:

- Name : Brooklyn Feest
